Rate limiting is an important concept in web development. A server can limit the number of requests for several reasons: the limit could be set per client based on their subscription package, or it could reflect the server’s capacity. Once the limit is reached, the server starts responding with the 429 ‘Too Many Requests’ status.

For server-side rate limiting, AspNetCoreRateLimit is a well-known NuGet package that provides a lot of easy configuration options. For client-side rate limiting, I did not find any packages or much information. In this blog post we will explore some async client-side rate limiting options, so that we can make requests as soon as possible without hitting the server’s request limit.

Server-side rate limit – setup

To set the scene, let’s create a rate-limited server so we can see the rate limit in action on our local machine. Create a new ASP.NET Core Web API project. This should create the default WeatherForecast controller with the GetWeatherForecast action. Then install the AspNetCoreRateLimit NuGet package and follow the setup steps here. These steps add the IpRateLimiting middleware and configure the in-memory cache. There is a Redis package too, but the in-memory implementation is good enough for our testing.

My .NET 6 Program.cs now looks like this:

using AspNetCoreRateLimit;

var builder = WebApplication.CreateBuilder(args);

ConfigurationManager configuration = builder.Configuration;

// Add services to the container.

builder.Services.AddControllers();

// Learn more about configuring Swagger/OpenAPI at https://aka.ms/aspnetcore/swashbuckle

builder.Services.AddEndpointsApiExplorer();

builder.Services.AddSwaggerGen();

builder.Services.AddOptions();

builder.Services.AddMemoryCache();

builder.Services.Configure<IpRateLimitOptions>(configuration.GetSection("IpRateLimiting"));

builder.Services.Configure<IpRateLimitPolicies>(configuration.GetSection("IpRateLimitPolicies"));

builder.Services.AddInMemoryRateLimiting();

builder.Services.AddSingleton<IRateLimitConfiguration, RateLimitConfiguration>();

var app = builder.Build();

// Configure the HTTP request pipeline.

if (app.Environment.IsDevelopment())

{

app.UseSwagger();

app.UseSwaggerUI();

}

app.UseIpRateLimiting();

app.UseHttpsRedirection();

app.UseAuthorization();

app.MapControllers();

app.Run();

I’ve put a very simple server-side rate limit in appsettings.json, limiting any URL to a maximum of 5 requests every 20 seconds. This keeps our testing quick. My appsettings.json now looks like this:

{

"Logging": {

"LogLevel": {

"Default": "Information",

"Microsoft.AspNetCore": "Warning"

}

},

"AllowedHosts": "*",

"IpRateLimiting": {

"EnableEndpointRateLimiting": false,

"StackBlockedRequests": false,

"RealIpHeader": "X-Real-IP",

"ClientIdHeader": "X-ClientId",

"HttpStatusCode": 429,

"EndpointWhitelist": [ "get:/api/license", "*:/api/status" ],

"GeneralRules": [

{

"Endpoint": "*",

"Period": "20s",

"Limit": 5

}

]

}

}

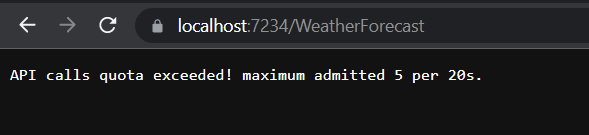

And voilà! Our server is now rate limited. We can easily test this by running the project in Visual Studio and browsing to “https://localhost:7234/WeatherForecast” (or whichever port VS chooses for your project). Browse to the weather forecast URL 5 times within 20 seconds, and on the 6th attempt you should see the rate limit message, like this:

Client-side rate limiting – setup

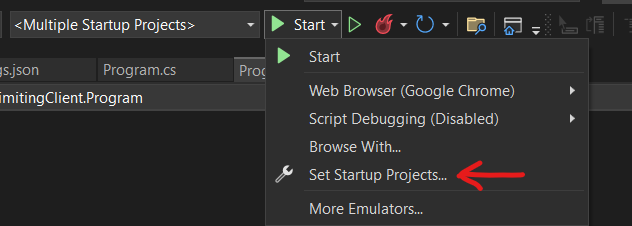

Let’s start off by reproducing the issue. For this, create a Console application in the same solution. We are going to update the solution so that both projects run in VS when we click the Start button. To do this, click the drop-down arrow beside the Start button and select ‘Set Startup Project’, like this:

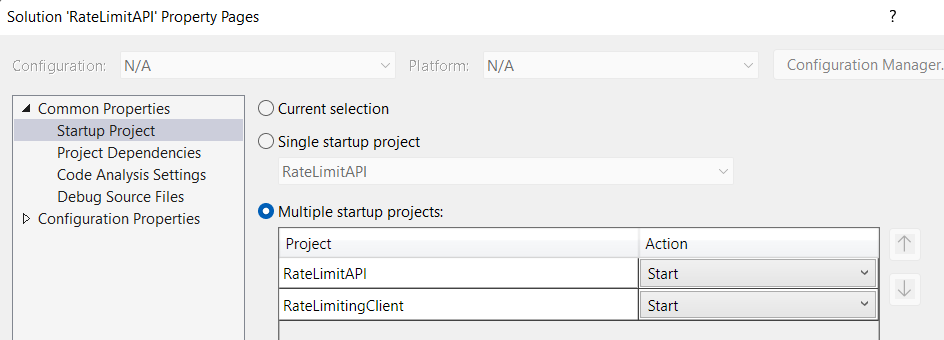

Then make sure both projects have the action ‘Start’. Now when we click the Start button, both projects should run and open a console each for the client and the server.

Next, we want to introduce a small delay of about 5 seconds at the start of our client application so that our Web API server can start up. Then we can start off with a simple for loop and see the outcome.

I’ve created an async method called CallServer, which makes the API calls using HttpClient and does some basic logging. It takes a single parameter, the iteration count, so we can make sure all the iterations ran and see their results. The initial implementation with the for loop should look like this:

using System;

using System.Net.Http;

using System.Threading;

using System.Threading.Tasks;

namespace RateLimitingClient

{

internal class Program

{

static readonly HttpClient client = new HttpClient();

static async Task Main(string[] args)

{

await Task.Delay(5000); // give the Web API server time to start without blocking the thread

for (int i = 0; i < 20; i++)

{

await CallServer(i);

}

Console.WriteLine("All done");

Console.ReadLine();

}

static async Task CallServer(int iterationCount)

{

try

{

var result = await client.GetAsync("https://localhost:7234/WeatherForecast");

var content = await result.Content.ReadAsStringAsync();

Console.WriteLine($"{iterationCount}: Normal: {content}");

}

catch (Exception ex)

{

Console.WriteLine($"{iterationCount}: Error: {ex}");

}

}

}

}

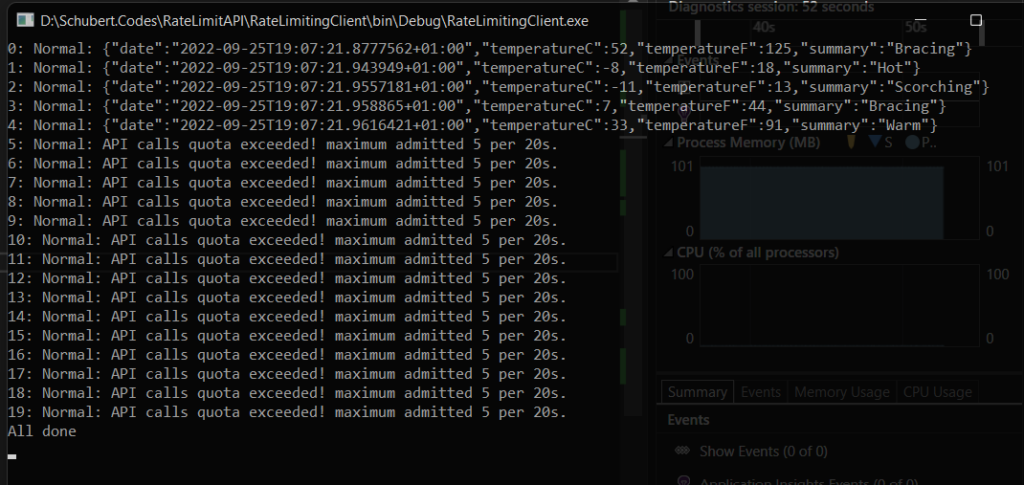

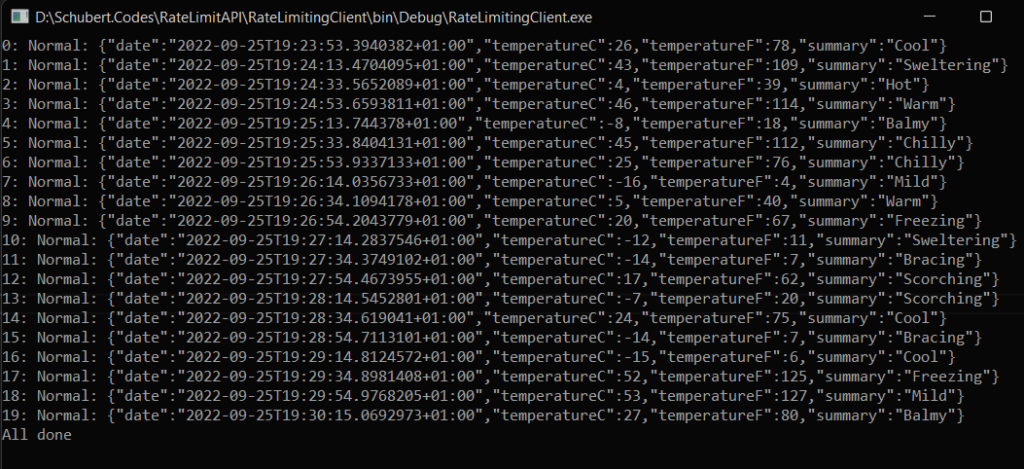

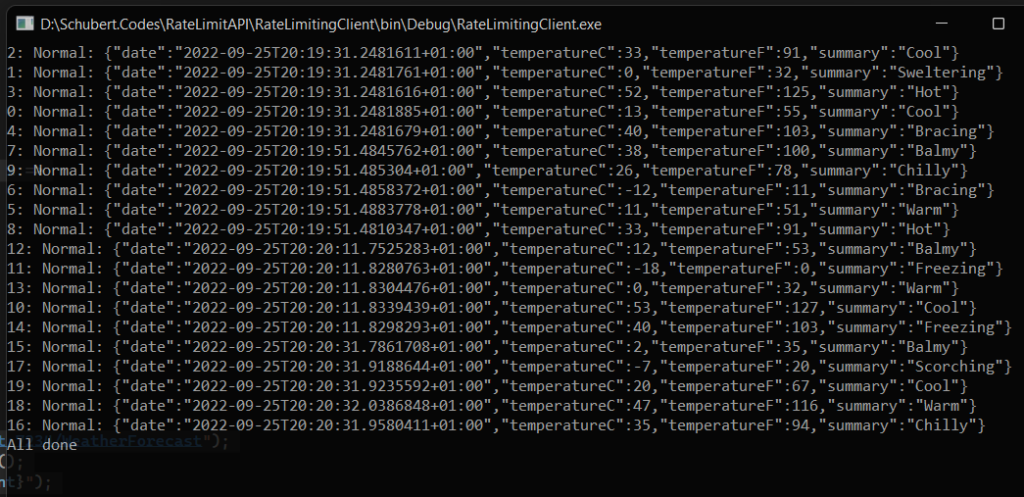

Click Run and we should see our rate limiting in action. The server did well and blocked the excess requests, but that is bad for the client, as we hit the limit. Below is the console output for the client; the server console shows a similar picture.
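One detail worth keeping in mind here: GetAsync does not throw on a 429, so CallServer logs a rate-limited response under “Normal” and only the body text gives it away. Below is a minimal sketch of how the log line could separate the two cases. The Describe helper and its message wording are my own assumptions, not part of the original client, and the Retry-After hint only shows up if the server sends that header. The responses are built by hand so the snippet runs without the server.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper: builds the log line and separates a 429 from a real success.
static string Describe(int iteration, HttpResponseMessage response)
{
    if (response.StatusCode == HttpStatusCode.TooManyRequests)
    {
        // Retry-After is optional; Delta is null when the header is absent or holds a date.
        TimeSpan? wait = response.Headers.RetryAfter?.Delta;
        string hint = wait.HasValue
            ? $"retry after {wait.Value.TotalSeconds:0}s"
            : "no Retry-After hint";
        return $"{iteration}: Rate limited (429), {hint}";
    }

    return response.IsSuccessStatusCode
        ? $"{iteration}: Normal ({(int)response.StatusCode})"
        : $"{iteration}: Failed ({(int)response.StatusCode})";
}

// Offline demonstration with hand-built responses, so no server is needed.
using var limited = new HttpResponseMessage(HttpStatusCode.TooManyRequests);
limited.Headers.RetryAfter = new RetryConditionHeaderValue(TimeSpan.FromSeconds(20));
Console.WriteLine(Describe(5, limited));

using var ok = new HttpResponseMessage(HttpStatusCode.OK);
Console.WriteLine(Describe(0, ok));
```

In the real client, the same check would go right after the GetAsync call, in place of the unconditional “Normal” log line.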

Client-side rate limiting – task delay

This time we are going to run the same client code with one small change: we are going to introduce a 20-second delay after each request. We end up with something like this:

using System;

using System.Collections.Generic;

using System.Net.Http;

using System.Threading;

using System.Threading.Tasks;

namespace RateLimitingClient

{

internal class Program

{

static readonly HttpClient client = new HttpClient();

static async Task Main(string[] args)

{

await Task.Delay(5000); // give the Web API server time to start without blocking the thread

for (int i = 0; i < 20; i++)

{

await CallServer(i);

}

Console.WriteLine("All done");

Console.ReadLine();

}

static async Task CallServer(int iterationCount)

{

try

{

var result = await client.GetAsync("https://localhost:7234/WeatherForecast");

var content = await result.Content.ReadAsStringAsync();

Console.WriteLine($"{iterationCount}: Normal: {content}");

await Task.Delay(20000);

}

catch (Exception ex)

{

Console.WriteLine($"{iterationCount}: Error: {ex}");

}

}

}

}

Whilst we did not hit the rate limit on the server, this took too long from the client’s side. It waited for 20 seconds after each request, meaning the whole run took 20 requests x 20 seconds = 400 seconds. In my live run it went from 19:23 to 19:30, just under 7 minutes.
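To put numbers on why a full 20-second pause is overkill, here is a small arithmetic sketch. The Spacing and TotalRunTime helpers are hypothetical names of mine. The idea is that a limit of 5 requests per 20 seconds only requires an average gap of 20 / 5 = 4 seconds between sequential requests. In practice you would probably add a small safety margin, since the server’s window boundaries will not line up exactly with the client’s clock.

```csharp
using System;

// Smallest even gap between requests that stays at or under `limit` per `period`.
static TimeSpan Spacing(int limit, TimeSpan period) =>
    TimeSpan.FromTicks(period.Ticks / limit);

// Total pause time when each of `requests` calls is followed by a fixed pause.
static TimeSpan TotalRunTime(int requests, TimeSpan pausePerRequest) =>
    TimeSpan.FromTicks(pausePerRequest.Ticks * requests);

var period = TimeSpan.FromSeconds(20);

// The run above: 20 requests, each followed by a 20 s pause.
Console.WriteLine($"Fixed 20 s pause: {TotalRunTime(20, period).TotalSeconds} s");

// Evenly spaced alternative: 5 requests per 20 s means one request every 4 s.
var gap = Spacing(5, period);
Console.WriteLine($"Gap per request: {gap.TotalSeconds} s");
Console.WriteLine($"Evenly spaced run: {TotalRunTime(20, gap).TotalSeconds} s");
```

Even spacing is still sequential, which is why the following sections move to parallel calls instead.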

Client-side rate limiting – AsyncEnumerator

To speed things up, let’s get the calls to run in parallel. One well-known NuGet package for this is AsyncEnumerator. Install the package and adjust the code so it looks like this:

using Dasync.Collections;

using System;

using System.Linq;

using System.Net.Http;

using System.Threading;

using System.Threading.Tasks;

namespace RateLimitingClient

{

internal class Program

{

static readonly HttpClient client = new HttpClient();

static async Task Main(string[] args)

{

await Task.Delay(5000); // give the Web API server time to start without blocking the thread

await Enumerable.Range(0, 20).ParallelForEachAsync(async x =>

{

await CallServer(x);

}, maxDegreeOfParallelism: 5);

Console.WriteLine("All done");

Console.ReadLine();

}

static async Task CallServer(int iterationCount)

{

try

{

var result = await client.GetAsync("https://localhost:7234/WeatherForecast");

var content = await result.Content.ReadAsStringAsync();

Console.WriteLine($"{iterationCount}: Normal: {content}");

await Task.Delay(20000);

}

catch (Exception ex)

{

Console.WriteLine($"{iterationCount}: Error: {ex}");

}

}

}

}

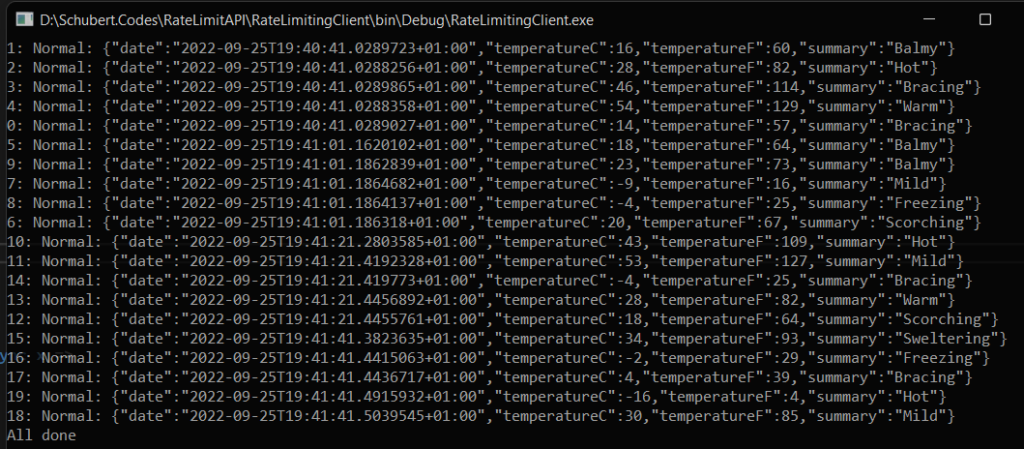

We set the maximum degree of parallelism to 5 and this did the job. We can see the first 5 requests ran in parallel. As soon as one of them completes, the 6th request starts, and the total run now took just over a minute (4 batches of 5 requests, each holding its slot for 20 seconds). This worked out really well, as we didn’t hit the server limit and the client made the requests as soon as it could. We can also observe that the iteration counts are not in order, but all 20 requests were made as quickly as possible.

Client-side rate limiting – SemaphoreSlim

In the previous run, the maximum degree of parallelism acted as the rate limiter and kept the client requests under control. Another approach is to use a semaphore. .NET provides 2 options when it comes to semaphores: Semaphore, a heavyweight wrapper around the Win32 semaphore that gets created at the OS level and can be shared across applications, and SemaphoreSlim, a lightweight object that stays within the current application and provides all the semaphore behaviors we need. A couple of small adjustments and your code should look like this:

using Dasync.Collections;

using System;

using System.Linq;

using System.Net.Http;

using System.Threading;

using System.Threading.Tasks;

namespace RateLimitingClient

{

internal class Program

{

static readonly HttpClient client = new HttpClient();

static SemaphoreSlim semaphoreSlim = new SemaphoreSlim(5);

static async Task Main(string[] args)

{

await Task.Delay(5000); // give the Web API server time to start without blocking the thread

await Enumerable.Range(0, 20).ParallelForEachAsync(async x =>

{

await semaphoreSlim.WaitAsync();

try

{

await CallServer(x);

}

finally

{

// Always hand the slot back, even if the call throws.

semaphoreSlim.Release();

}

});

Console.WriteLine("All done");

Console.ReadLine();

}

static async Task CallServer(int iterationCount)

{

try

{

var result = await client.GetAsync("https://localhost:7234/WeatherForecast");

var content = await result.Content.ReadAsStringAsync();

Console.WriteLine($"{iterationCount}: Normal: {content}");

await Task.Delay(20000);

}

catch (Exception ex)

{

Console.WriteLine($"{iterationCount}: Error: {ex}");

}

}

}

}

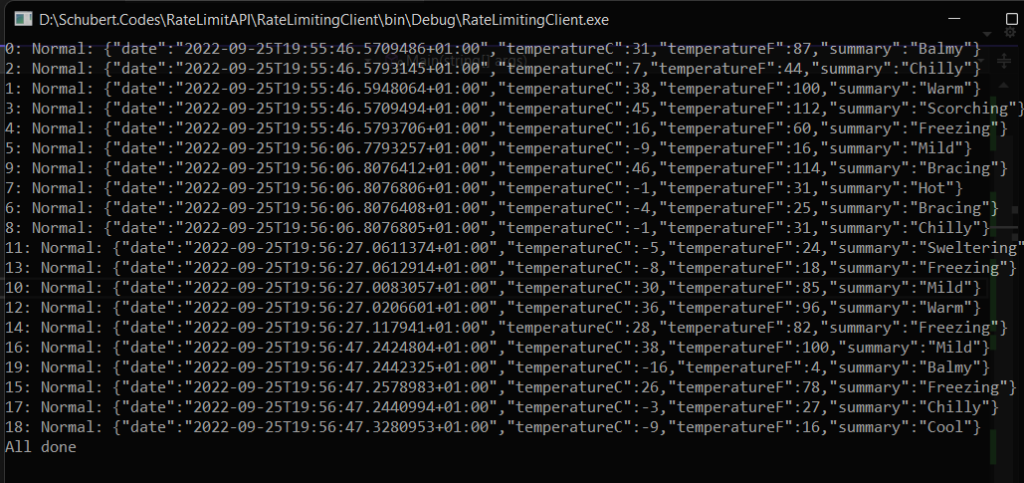

Notice how the parallel foreach no longer sets the maximum degree of parallelism; instead we use the semaphore to control the number of requests. The end result is similar: again we can see the iteration counts are not in order, and all 20 requests were made as soon as possible by the client without hitting the rate limit of the server.

Client-side rate limiting – System.Threading.RateLimiting

In my quest to find other ways to achieve this, I stumbled on another NuGet package, System.Threading.RateLimiting. It was in beta the last time I looked at it but now has a release candidate, 7.0.0.rc.1.22426. In this package is a class called FixedWindowRateLimiter, configured through FixedWindowRateLimiterOptions, which can be used to limit the number of requests in a time window. It also has a class called SlidingWindowRateLimiter, which can be used to limit the number of requests using a sliding time window. You can read more about it here. The updated code looks like this:
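Since the semaphore is now doing all the limiting, the same gate also works without any parallel-foreach package. The sketch below is my own variation, not the code used for the run above: it starts all 20 tasks with Task.WhenAll, uses a short Task.Delay as a stand-in for the HTTP call, and records the highest number of tasks inside the gate at once. The names gate, Work and peak are illustrative.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

var gate = new SemaphoreSlim(5);   // at most 5 callers inside the gate at once
var sync = new object();
int running = 0, peak = 0;

async Task Work(int iteration)
{
    await gate.WaitAsync();
    try
    {
        lock (sync)
        {
            running++;
            peak = Math.Max(peak, running);   // remember the highest concurrency seen
        }

        await Task.Delay(50);                 // stand-in for the HTTP call
    }
    finally
    {
        lock (sync) { running--; }
        gate.Release();                       // free the slot even if the call failed
    }
}

// Start all 20 tasks up front; the semaphore decides how many proceed at a time.
await Task.WhenAll(Enumerable.Range(0, 20).Select(Work));

Console.WriteLine($"Peak concurrency: {peak}");
```

The reported peak should never exceed 5, which is the same guarantee the maximum degree of parallelism gave us earlier.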

using Dasync.Collections;

using System;

using System.Linq;

using System.Net.Http;

using System.Threading;

using System.Threading.RateLimiting;

using System.Threading.Tasks;

namespace RateLimitingClient

{

internal class Program

{

static readonly HttpClient client = new HttpClient();

static async Task Main(string[] args)

{

await Task.Delay(5000); // give the Web API server time to start without blocking the thread

var limiterOption = new FixedWindowRateLimiterOptions();

limiterOption.PermitLimit = 5;

limiterOption.QueueProcessingOrder = QueueProcessingOrder.OldestFirst;

limiterOption.QueueLimit = 20;

limiterOption.Window = TimeSpan.FromSeconds(25);

var fixedWindowRateLimiter = new FixedWindowRateLimiter(limiterOption);

await Enumerable.Range(0, 20).ParallelForEachAsync(async x =>

{

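using var lease = await fixedWindowRateLimiter.AcquireAsync();

if (lease.IsAcquired)

{

await CallServer(x);

}

else

{

// The queue was full, so no permit was granted and the call is skipped.

Console.WriteLine($"{x}: No permit acquired");

}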

});

Console.WriteLine("All done");

Console.ReadLine();

}

static async Task CallServer(int iterationCount)

{

try

{

var result = await client.GetAsync("https://localhost:7234/WeatherForecast");

var content = await result.Content.ReadAsStringAsync();

Console.WriteLine($"{iterationCount}: Normal: {content}");

await Task.Delay(20000);

}

catch (Exception ex)

{

Console.WriteLine($"{iterationCount}: Error: {ex}");

}

}

}

}

And the outcome is similar to the semaphore run.

Client-side rate limiting – Polly BulkheadAsync

The last option I want to explore is Polly. It is an awesome library for retrying web requests and handling timeouts. The Bulkhead policy allows us to specify the maximum degree of parallelization and the maximum queue size. Add the Polly NuGet package and update a few lines of code to get the below:
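To make the “fixed window” idea concrete, here is a tiny hand-rolled counter. It is only an illustration of the concept under my own assumptions, not how the System.Threading.RateLimiting package is implemented: it is single-threaded, takes the current time as a parameter so it can be exercised without waiting, and rejects extra calls, whereas the library version above queues them when QueueLimit is greater than zero. The TryAcquire name and the timestamps are hypothetical.

```csharp
using System;

// Illustrative settings, matching the options used in the listing above.
var window = TimeSpan.FromSeconds(25);
int permitLimit = 5;

DateTime windowStart = DateTime.MinValue;
int used = 0;

// Grants a permit if the current window still has budget left.
bool TryAcquire(DateTime now)
{
    if (now - windowStart >= window)
    {
        windowStart = now;   // a new window begins and the budget resets
        used = 0;
    }

    if (used >= permitLimit) return false;

    used++;
    return true;
}

// Eight attempts, one second apart, all inside the first 25 s window.
var t0 = new DateTime(2022, 1, 1, 19, 0, 0);
int grantedInFirstWindow = 0;
for (int i = 0; i < 8; i++)
{
    if (TryAcquire(t0.AddSeconds(i))) grantedInFirstWindow++;
}

// One more attempt exactly when the next window opens.
bool grantedAfterReset = TryAcquire(t0.AddSeconds(25));

Console.WriteLine($"{grantedInFirstWindow} granted in the first window, next window open: {grantedAfterReset}");
```

Only the first 5 attempts in the window succeed, and the budget comes back as soon as the window rolls over.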

using Dasync.Collections;

using System;

using System.Linq;

using System.Net.Http;

using System.Threading;

using System.Threading.Tasks;

namespace RateLimitingClient

{

internal class Program

{

static readonly HttpClient client = new HttpClient();

static async Task Main(string[] args)

{

await Task.Delay(5000); // give the Web API server time to start without blocking the thread

var policy = Polly.Policy.BulkheadAsync(5, 20);

await Enumerable.Range(0, 20).ParallelForEachAsync(async x =>

{

await policy.ExecuteAsync(() => CallServer(x));

});

Console.WriteLine("All done");

Console.ReadLine();

}

static async Task CallServer(int iterationCount)

{

try

{

var result = await client.GetAsync("https://localhost:7234/WeatherForecast");

var content = await result.Content.ReadAsStringAsync();

Console.WriteLine($"{iterationCount}: Normal: {content}");

await Task.Delay(20000);

}

catch (Exception ex)

{

Console.WriteLine($"{iterationCount}: Error: {ex}");

}

}

}

}

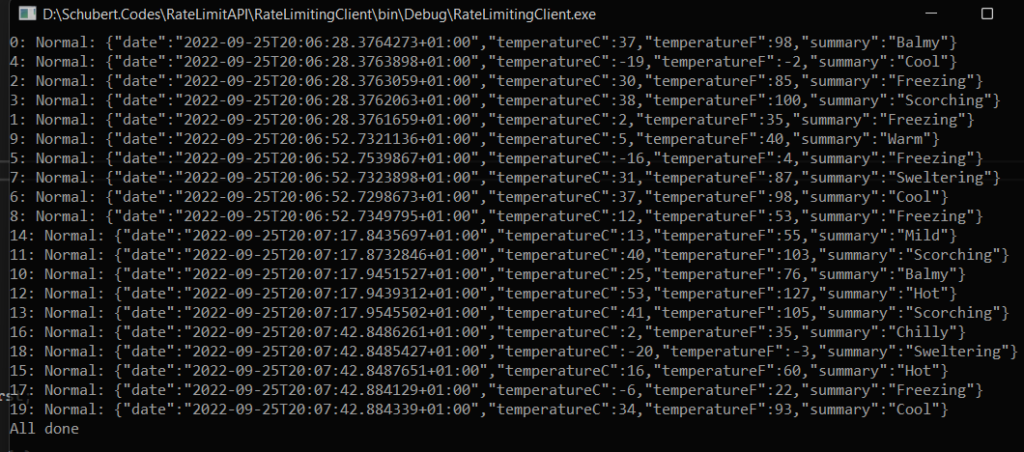

The outcome of this is good too. We can see the iteration counts are not in order, and all 20 requests were made as soon as possible.

Final thoughts

.NET 6 now provides an async parallel foreach out of the box, Parallel.ForEachAsync. You can read more about it here. I’ve used the AsyncEnumerator NuGet package to run the loops asynchronously, which is a good option for applications that predate .NET 6.

In production code, consider using IHttpClientFactory instead of re-using a single instance of HttpClient. There is a good article explaining it here.
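For reference, here is a minimal sketch of what the built-in .NET 6 version could look like. It is not the code used for the runs above. A short Task.Delay stands in for CallServer, and the completed bag is only there to show that every iteration ran; both are assumptions for illustration.

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

var completed = new ConcurrentBag<int>();

// Same cap as the AsyncEnumerator example: at most 5 iterations in flight.
var options = new ParallelOptions { MaxDegreeOfParallelism = 5 };

await Parallel.ForEachAsync(Enumerable.Range(0, 20), options, async (i, cancellationToken) =>
{
    await Task.Delay(50, cancellationToken);   // stand-in for CallServer(i)
    completed.Add(i);
});

Console.WriteLine($"Completed {completed.Count} iterations");
```

The shape is very close to the ParallelForEachAsync call from the package; the main difference is that the body also receives a CancellationToken.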

Until next time, Happy Coding!